How Stripe City's Billboards Made Real-Time Data Feel Alive

How we built a system that processed 2.3 million API calls over 96 hours without a single failure.

For their 2024 Black Friday campaign, Stripe’s marketing team streamed live transaction data from a custom-built, analog-looking machine. It was a hit. For 2025, they wanted to go even bigger.

Together with renowned motion designer John LePore and his studio, Blackbox Infinite (BBI), the team at Stripe created Stripe City: a whimsical, hand-built miniature downtown, conceived as a physical love letter to their global user community and presented via a live video stream throughout the Black Friday/Cyber Monday shopping weekend.

The city was packed with customer logos, inside jokes, and tiny details; in just one of the model’s five ‘neighborhoods’, you’d find a Stripe Press library, a Crumbl cookie truck, and a figurine of podcaster Dwarkesh Patel chatting with longtime Stripe COO Claire Hughes-Johnson.

And woven throughout the city were nearly a dozen tiny billboards — real-time data displays showing live transaction metrics, styled like Times Square jumbotrons. For this piece, Stripe and BBI brought in Bits&Letters to design and engineer the graphics and backend systems for those billboards, each of which would present a different live metric, set of logos, or a deck of user-generated charts pulled from Stripe’s X mentions.

B&L’s Role and the Real Constraints

Stripe City was a collaboration between several creative teams. John and Blackbox Infinite led the creative direction and motion design, with deep involvement from the team at Stripe Labs. FX WRX built and filmed the physical miniature, with team members’ hands occasionally popping into frame to add new figurines or move stuff around. Bits&Letters’ job was to build the technical layer connecting Stripe’s live data to the eleven tiny displays serving as digital billboards, and to architect that layer so the production team could operate it without needing a developer on set all weekend.

About six weeks before Black Friday, we started working with BBI on the basic outlines for the solution. Stripe would provide a private API endpoint, and we’d transform that data into rendered graphics for each billboard. Unlike a standard website project, this came with some unusual constraints that shaped every decision that followed:

The screens couldn’t fail in front of millions of viewers. If one of the eleven screens froze or showed stale data without a quick and easy way to recover, everyone would see it.

We didn’t know the hardware until late in the game. We had some ideas for what might work and advised the BBI and FX WRX teams accordingly, but the exact screen and PC specs were in flux until 10 days before launch. The web app therefore needed enough performance headroom to run reliably, ideally with crisp graphics and 60 fps animations, on whatever hardware we ended up with.

No engineers would be on set. While Stripe City was constantly attended by John and members of the FX WRX crew, all talented craftspeople, none of them were developers or IT pros. Therefore, the city’s backend system needed to either fail gracefully or, better yet, never break in the first place.

Creative direction could (and did) shift throughout the project. With only a few weeks from kickoff to launch, we knew from experience that creative direction and requirements would likely stay in flux until almost the last minute. The architecture and systems needed to support creative pivots without taking anything offline.

The thread connecting all of these is a principle we call “headroom”: building simple, solid foundational systems with enough flexibility and resilience to handle surprises like these. Stripe City was unusual in that it only had to stay up for a weekend, but it’s the same basic approach we take to any web-based system. When you think about it, handling data or design changes at the 11th hour is no different from dealing with them on the 11th day or the 11th month.

Architecture

Given all the constraints and principles mentioned above, we wanted the architecture for Stripe City’s billboards to be the simplest thing that could get the job done while running unattended for four days.

The final setup had three layers, mixing custom-built software with some off-the-shelf, battle-tested infrastructure:

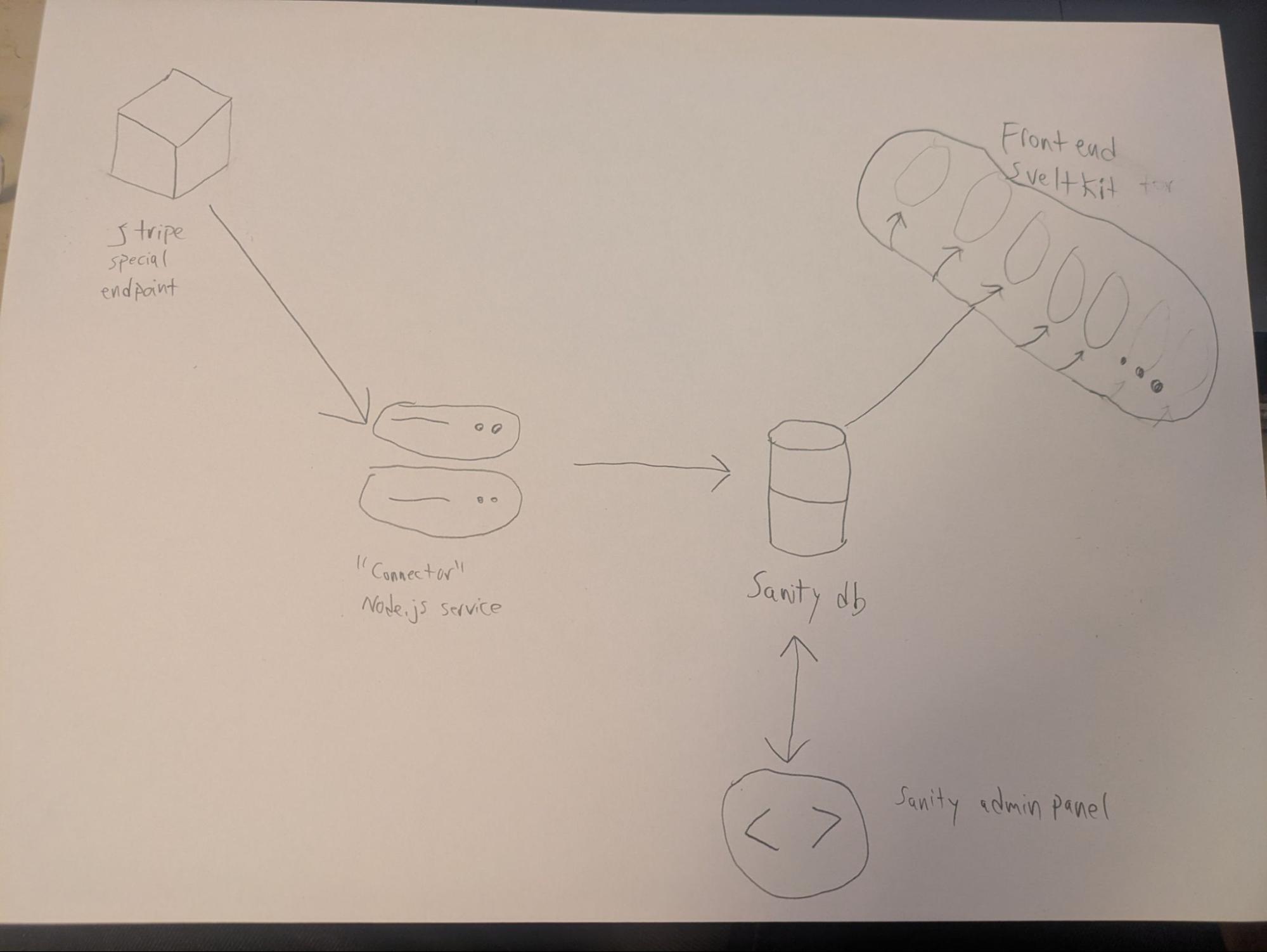

- The Connector was a Node.js server hosted on Render that polled Stripe’s API every 3–6 seconds and wrote any updates to a single Sanity document using Sanity’s JavaScript SDK.

- Sanity was the runtime data layer, feeding structured data and image assets to the individual billboards via its excellent, ready-to-use live APIs.

- Lastly, each billboard was one page of a single SvelteKit-powered web app. Each page was responsible for taking in raw JSON data from Sanity and rendering whatever jumbotron-style graphic John’s team had designed for that metric, using GSAP for snappy animations.
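To make the Connector's job concrete, here is a minimal sketch of a single polling cycle. It is an illustration, not the production code: the names `Metrics`, `ConnectorDeps`, `Connector`, `hasChanged`, and `pollOnce` are hypothetical, the payload shape is assumed, and the fetch and write steps are injected as plain functions so the sketch does not depend on Stripe's private endpoint or on any particular Sanity client call.

```typescript
// Hypothetical payload shape: metric name -> latest value.
// The real Stripe endpoint was private, so these fields are assumptions.
type Metrics = Record<string, number>;

// The two side effects are injected so the polling logic stays testable.
interface ConnectorDeps {
  fetchMetrics: () => Promise<Metrics>; // e.g. an HTTP call to the private endpoint
  writeDocument: (metrics: Metrics) => Promise<void>; // e.g. a write to the single Sanity document
}

// True when any metric differs from what was last written.
function hasChanged(prev: Metrics | null, next: Metrics): boolean {
  if (prev === null) return true;
  const keys = Object.keys(prev).concat(Object.keys(next));
  for (const key of keys) {
    if (prev[key] !== next[key]) return true;
  }
  return false;
}

type PollResult = "written" | "unchanged" | "error";

class Connector {
  private deps: ConnectorDeps;
  private lastWritten: Metrics | null = null;

  constructor(deps: ConnectorDeps) {
    this.deps = deps;
  }

  // One polling cycle: fetch, compare, and write only if something changed.
  async pollOnce(): Promise<PollResult> {
    try {
      const next = await this.deps.fetchMetrics();
      if (!hasChanged(this.lastWritten, next)) return "unchanged";
      await this.deps.writeDocument(next);
      this.lastWritten = next;
      return "written";
    } catch {
      // Fail gracefully: leave the last good document in place so the
      // billboards keep showing it, and try again on the next cycle.
      return "error";
    }
  }
}
```

In a long-running Node.js process, `pollOnce` would be called on a timer at the 3–6 second cadence described above. Catching errors inside the cycle means one bad response never stops the loop.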

Why SvelteKit? We typically reach for React and Next.js for projects like this, given their status as the de facto standard for rich web apps. However, the short project lifespan and unknown hardware/software stack made SvelteKit a solid choice. Svelte components offer an elegant, reactive approach to data management similar to React’s, while being relatively lean in JS payload and memory overhead. A React-based app may well have been fine, but in keeping with our ‘headroom’ principle, we didn’t want to risk browser crashes or (more likely) janky animations caused by memory leaks or poorly optimized code. It also helped that SvelteKit is one of Sanity’s best-supported frameworks, which sped up development and reduced risk.

Why Sanity? Sanity’s Content Lake is already built to support heavy traffic; they serve clients like AT&T and SKIMS, holding up under both viral product drops and the annual iPhone launch. Stripe City needed to handle roughly 15,000 API writes per day (over 100,000 across the whole weekend) and propagate those changes to the displays as close to instantly as possible, all with a near-zero failure rate. Many web apps have significantly higher API hit rates than this, but again, headroom — Sanity’s enterprise-grade scale and reliability gave us confidence that latency and uptime would be handled, allowing our team to focus on getting the experience right.
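As a back-of-the-envelope check, the write volume is bounded by the Connector's polling cadence, since each poll produces at most one document write. The sketch below assumes a fixed interval at either end of the 3–6 second range and a write on every poll (an upper bound); the function names are illustrative.

```typescript
const SECONDS_PER_DAY = 24 * 60 * 60; // 86,400
const RUN_HOURS = 96; // length of the livestream

// Polls in one day at a fixed interval.
function pollsPerDay(intervalSeconds: number): number {
  return SECONDS_PER_DAY / intervalSeconds;
}

// Polls across the whole run at a fixed interval.
function pollsOverRun(intervalSeconds: number, hours: number = RUN_HOURS): number {
  return (hours * 3600) / intervalSeconds;
}

console.log(pollsPerDay(6)); // 14400
console.log(pollsPerDay(3)); // 28800
console.log(pollsOverRun(6)); // 57600
console.log(pollsOverRun(3)); // 115200
```

The figures quoted above sit within these bounds, which is a modest load for a platform built for far heavier traffic.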

Using Sanity also allowed some operational tasks — such as uploading new client logos or fixing images that looked fine on our machines but appeared washed out on the livestream — to be handled in the Studio editing interface by other members of the creative team, so changes could roll out quickly without calling in a developer.

Why Render? We usually don’t think too hard about where websites like this are hosted; most public-facing sites are deployed to serverless platforms like Vercel (our typical go-to) or Netlify, and they all tend to do a good job. On this project, however, the Connector polling service needed to run 24/7 on a regular old server, while working well in our typical build-and-deploy workflows. Render was a good choice because it offers both conventional servers and static website hosting, both with continuous integration, great observability, and solid performance.

When “Real-Time” Isn’t Fast Enough

For the “hero” number (overall transaction volume, displayed on the tallest downtown building in Stripe City), we wanted to show continuous growth, with numbers spinning at 60 fps to indicate constant energy and activity on the busiest retail weekend of the year. But our real-time data only arrived every few seconds, so how could we create a sense of continuous movement and make it feel alive?

Between live updates, we animated the number upward, sizing the ‘steps’ from the difference between the last two real data points from the Stripe API. If transactions accelerated, the animation sped up to match; if they slowed, so did the display. The number displayed at any given moment wasn’t necessarily the “real” current state of the metric, but the animation was always derived from real data, acting more as a smoothing function than a prediction.
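One way to read that approach is sketched below. It is a simplified illustration, not the code that ran on the billboards: the `SmoothedCounter` class, the cap of one step's worth of growth, and the rule that the shown value never decreases are assumptions made for the example, not details from the project.

```typescript
// One real reading from the API: a cumulative total and when it arrived.
interface DataPoint {
  value: number;
  timeMs: number;
}

class SmoothedCounter {
  private prev: DataPoint | null = null;
  private curr: DataPoint | null = null;
  private shown = 0;

  // Record a new real reading from the data source.
  push(point: DataPoint): void {
    this.prev = this.curr;
    this.curr = point;
  }

  // Value to draw at `nowMs`; intended to be called once per animation frame.
  tick(nowMs: number): number {
    const prev = this.prev;
    const curr = this.curr;
    if (curr === null) return this.shown;

    let target = curr.value;
    if (prev !== null) {
      const dv = curr.value - prev.value; // growth between the last two readings
      const dt = curr.timeMs - prev.timeMs; // time between the last two readings
      if (dv > 0 && dt > 0) {
        const elapsed = Math.max(0, nowMs - curr.timeMs);
        // Advance at the most recently observed rate, but never by more than
        // one reading's worth of growth (assumed cap, so a late update
        // cannot let the display run far ahead of the real total).
        const step = Math.min((dv * elapsed) / dt, dv);
        target = curr.value + step;
      }
    }

    // Assumed rule: a cumulative counter should never visibly tick backwards.
    this.shown = Math.max(this.shown, Math.floor(target));
    return this.shown;
  }
}

const counter = new SmoothedCounter();
counter.push({ value: 1000, timeMs: 0 });
counter.push({ value: 1300, timeMs: 3000 });
console.log(counter.tick(4500)); // 1450: halfway through the next 3-second window
```

In a browser, `tick` would typically be driven by `requestAnimationFrame`, with each new reading passed to `push` as it arrives. Because the step size comes from the last two real readings, a faster stream of transactions produces a faster-spinning number.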

Admittedly, these big numbers were (legitimately) moving up so fast that it would have been nearly impossible for anyone to notice an inaccuracy. But Stripe is serious about data, as are we, so it was important that even these wildly spinning numbers were grounded in truth.

The Long Weekend

The system ran continuously for 96 hours. 2.3 million API calls processed, zero failures. The on-site production team never had to think about the billboards — they could focus on switching out dioramas and managing the livestream, while the data stayed fresh and the screens kept running.

But the true measure of success was the response. Stripe City was a love letter to Stripe’s user community; the users received that letter, loved it, and retweeted it. Partner companies started posting about the installation, the equivalent of saying “Look, Ma! I’m on TV!” to their entire user base. What could have been just a marketing campaign for one brand became a shared moment on social media (especially X), as both large companies and individual entrepreneurs pointed out in-jokes and logos on the billboards and across the miniature city as a whole.

The billboards played a specific role in that, grounding a celebration of Stripe’s culture and community with real data shown in real time, proving this wasn’t just a charming model but a window into what was happening on Stripe’s platform right now. Having the billboards there and working as seamlessly as they did made the whole installation feel more real and even more generous.

Our billboards blended into the cityscape, which was exactly the point. If the data had been wrong or the animations janky, the screens would have stood out for the wrong reasons. Instead, they felt like a natural part of the world, letting viewers focus on the details that made Stripe City special.

What We Brought Home from Our Trip to Stripe City

Our work on this project reinforced a principle we keep coming back to: build for the people who’ll operate the work, not just the people who’ll see it.

The billboards were seen by millions of viewers on the livestream, but the architecture behind them was shaped by the needs of the production crew and design team who’d be operating the City all weekend. They needed its systems not just to work, but to stay responsive to last-minute additions and tweaks without a team of software engineers on standby.

Beyond that, Stripe City showed that websites are experiences, not just brochures. Here those experiences played out over a single weekend; in the real world, they have to handle months or years of uptime. Smart web design and architecture therefore look beyond the immediate creative brief to what the requirements will be tomorrow or the next day.

When we got those right, everything else followed — the reliability, the flexibility, and the invisible technical craft that let a tiny handmade city feel alive.